目录

- - 下载地址

- - 配置环境变量

- - 启动

- vim ./start.sh

- vim ./stop.sh

- queries.yaml

- - 配置prometheus

- 下载地址

https://github.com/prometheus-community/postgres_exporter/releases

- 配置环境变量

- 启动

因启动前需要配置环境变量后再进行启动

运行如下语句:

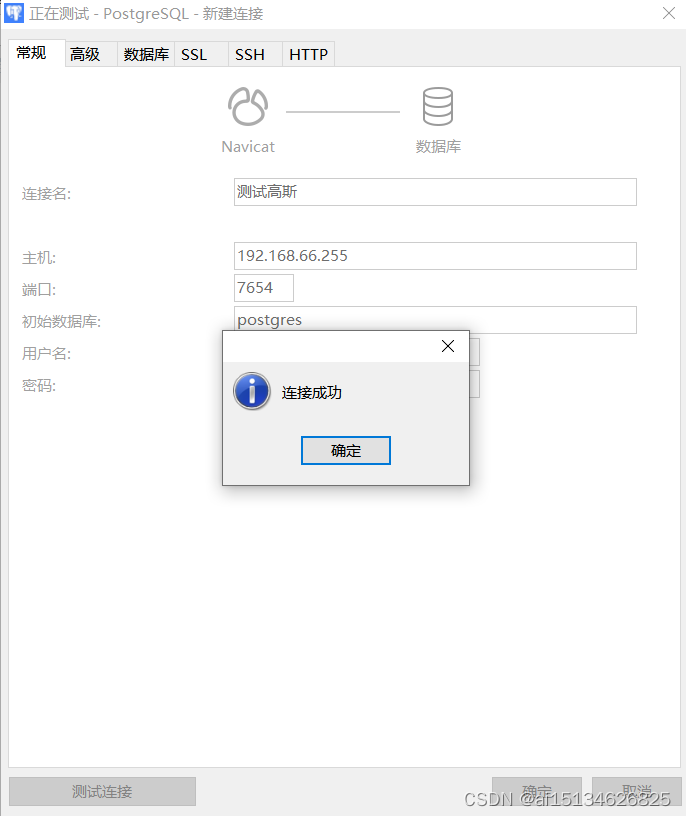

export DATA_SOURCE_NAME=postgresql://user:userpwd@0.0.0.0:5432/db?sslmode=disable./postgres_exporter --extend.query-path queries.yaml --log.level debug

故为了方便辨认 我更改了它的端口,并编了./start.sh和./stop.sh两个脚本方便以后启动,语句如下:

vim ./start.sh

export DATA_SOURCE_NAME=postgresql://user:userpwd@0.0.0.0:5432/db?sslmode=disable

nohup ./postgres_exporter --log.level debug --web.listen-address=:15432 > ./startlog.log 2>&1 & # --extend.query-path queries.yaml

vim ./stop.sh

#!/bin/sh

echo "stop"

#!/bin/bash

PID=$(ps -ef | grep postgres_exporter | grep -v grep | awk '{ print $2 }')

if [ "${PID}" ]

then

echo 'Application is stpping...'

echo kill $PID DONE

kill $PID

else

echo 'Application is already stopped...'

fi

./start.sh启动脚本内:

–web.listen-address为监听的端口

–extend.query-path为自定义查询的文件

queries.yaml

无需要可忽略。

pg_replication:

query: "SELECT CASE WHEN NOT pg_is_in_recovery() THEN 0 ELSE GREATEST (0, EXTRACT(EPOCH FROM (now() - pg_last_xact_replay_timestamp()))) END AS lag"

master: true

metrics:

- lag:

usage: "GAUGE"

description: "Replication lag behind master in seconds"

pg_postmaster:

query: "SELECT pg_postmaster_start_time as start_time_seconds from pg_postmaster_start_time()"

master: true

metrics:

- start_time_seconds:

usage: "GAUGE"

description: "Time at which postmaster started"

pg_stat_user_tables:

query: |

SELECT

current_database() datname,

schemaname,

relname,

seq_scan,

seq_tup_read,

idx_scan,

idx_tup_fetch,

n_tup_ins,

n_tup_upd,

n_tup_del,

n_tup_hot_upd,

n_live_tup,

n_dead_tup,

n_mod_since_analyze,

COALESCE(last_vacuum, '1970-01-01Z') as last_vacuum,

COALESCE(last_autovacuum, '1970-01-01Z') as last_autovacuum,

COALESCE(last_analyze, '1970-01-01Z') as last_analyze,

COALESCE(last_autoanalyze, '1970-01-01Z') as last_autoanalyze,

vacuum_count,

autovacuum_count,

analyze_count,

autoanalyze_count

FROM

pg_stat_user_tables

metrics:

- datname:

usage: "LABEL"

description: "Name of current database"

- schemaname:

usage: "LABEL"

description: "Name of the schema that this table is in"

- relname:

usage: "LABEL"

description: "Name of this table"

- seq_scan:

usage: "COUNTER"

description: "Number of sequential scans initiated on this table"

- seq_tup_read:

usage: "COUNTER"

description: "Number of live rows fetched by sequential scans"

- idx_scan:

usage: "COUNTER"

description: "Number of index scans initiated on this table"

- idx_tup_fetch:

usage: "COUNTER"

description: "Number of live rows fetched by index scans"

- n_tup_ins:

usage: "COUNTER"

description: "Number of rows inserted"

- n_tup_upd:

usage: "COUNTER"

description: "Number of rows updated"

- n_tup_del:

usage: "COUNTER"

description: "Number of rows deleted"

- n_tup_hot_upd:

usage: "COUNTER"

description: "Number of rows HOT updated (i.e., with no separate index update required)"

- n_live_tup:

usage: "GAUGE"

description: "Estimated number of live rows"

- n_dead_tup:

usage: "GAUGE"

description: "Estimated number of dead rows"

- n_mod_since_analyze:

usage: "GAUGE"

description: "Estimated number of rows changed since last analyze"

- last_vacuum:

usage: "GAUGE"

description: "Last time at which this table was manually vacuumed (not counting VACUUM FULL)"

- last_autovacuum:

usage: "GAUGE"

description: "Last time at which this table was vacuumed by the autovacuum daemon"

- last_analyze:

usage: "GAUGE"

description: "Last time at which this table was manually analyzed"

- last_autoanalyze:

usage: "GAUGE"

description: "Last time at which this table was analyzed by the autovacuum daemon"

- vacuum_count:

usage: "COUNTER"

description: "Number of times this table has been manually vacuumed (not counting VACUUM FULL)"

- autovacuum_count:

usage: "COUNTER"

description: "Number of times this table has been vacuumed by the autovacuum daemon"

- analyze_count:

usage: "COUNTER"

description: "Number of times this table has been manually analyzed"

- autoanalyze_count:

usage: "COUNTER"

description: "Number of times this table has been analyzed by the autovacuum daemon"

pg_statio_user_tables:

query: "SELECT current_database() datname, schemaname, relname, heap_blks_read, heap_blks_hit, idx_blks_read, idx_blks_hit, toast_blks_read, toast_blks_hit, tidx_blks_read, tidx_blks_hit FROM pg_statio_user_tables"

metrics:

- datname:

usage: "LABEL"

description: "Name of current database"

- schemaname:

usage: "LABEL"

description: "Name of the schema that this table is in"

- relname:

usage: "LABEL"

description: "Name of this table"

- heap_blks_read:

usage: "COUNTER"

description: "Number of disk blocks read from this table"

- heap_blks_hit:

usage: "COUNTER"

description: "Number of buffer hits in this table"

- idx_blks_read:

usage: "COUNTER"

description: "Number of disk blocks read from all indexes on this table"

- idx_blks_hit:

usage: "COUNTER"

description: "Number of buffer hits in all indexes on this table"

- toast_blks_read:

usage: "COUNTER"

description: "Number of disk blocks read from this table's TOAST table (if any)"

- toast_blks_hit:

usage: "COUNTER"

description: "Number of buffer hits in this table's TOAST table (if any)"

- tidx_blks_read:

usage: "COUNTER"

description: "Number of disk blocks read from this table's TOAST table indexes (if any)"

- tidx_blks_hit:

usage: "COUNTER"

description: "Number of buffer hits in this table's TOAST table indexes (if any)"

# WARNING: This set of metrics can be very expensive on a busy server as every unique query executed will create an additional time series

pg_stat_statements:

query: "SELECT t2.rolname, t3.datname, queryid, calls, total_time / 1000 as total_time_seconds, min_time / 1000 as min_time_seconds, max_time / 1000 as max_time_seconds, mean_time / 1000 as mean_time_seconds, stddev_time / 1000 as stddev_time_seconds, rows, shared_blks_hit, shared_blks_read, shared_blks_dirtied, shared_blks_written, local_blks_hit, local_blks_read, local_blks_dirtied, local_blks_written, temp_blks_read, temp_blks_written, blk_read_time / 1000 as blk_read_time_seconds, blk_write_time / 1000 as blk_write_time_seconds FROM pg_stat_statements t1 JOIN pg_roles t2 ON (t1.userid=t2.oid) JOIN pg_database t3 ON (t1.dbid=t3.oid) WHERE t2.rolname != 'rdsadmin'"

master: true

metrics:

- rolname:

usage: "LABEL"

description: "Name of user"

- datname:

usage: "LABEL"

description: "Name of database"

- queryid:

usage: "LABEL"

description: "Query ID"

- calls:

usage: "COUNTER"

description: "Number of times executed"

- total_time_seconds:

usage: "COUNTER"

description: "Total time spent in the statement, in milliseconds"

- min_time_seconds:

usage: "GAUGE"

description: "Minimum time spent in the statement, in milliseconds"

- max_time_seconds:

usage: "GAUGE"

description: "Maximum time spent in the statement, in milliseconds"

- mean_time_seconds:

usage: "GAUGE"

description: "Mean time spent in the statement, in milliseconds"

- stddev_time_seconds:

usage: "GAUGE"

description: "Population standard deviation of time spent in the statement, in milliseconds"

- rows:

usage: "COUNTER"

description: "Total number of rows retrieved or affected by the statement"

- shared_blks_hit:

usage: "COUNTER"

description: "Total number of shared block cache hits by the statement"

- shared_blks_read:

usage: "COUNTER"

description: "Total number of shared blocks read by the statement"

- shared_blks_dirtied:

usage: "COUNTER"

description: "Total number of shared blocks dirtied by the statement"

- shared_blks_written:

usage: "COUNTER"

description: "Total number of shared blocks written by the statement"

- local_blks_hit:

usage: "COUNTER"

description: "Total number of local block cache hits by the statement"

- local_blks_read:

usage: "COUNTER"

description: "Total number of local blocks read by the statement"

- local_blks_dirtied:

usage: "COUNTER"

description: "Total number of local blocks dirtied by the statement"

- local_blks_written:

usage: "COUNTER"

description: "Total number of local blocks written by the statement"

- temp_blks_read:

usage: "COUNTER"

description: "Total number of temp blocks read by the statement"

- temp_blks_written:

usage: "COUNTER"

description: "Total number of temp blocks written by the statement"

- blk_read_time_seconds:

usage: "COUNTER"

description: "Total time the statement spent reading blocks, in milliseconds (if track_io_timing is enabled, otherwise zero)"

- blk_write_time_seconds:

usage: "COUNTER"

description: "Total time the statement spent writing blocks, in milliseconds (if track_io_timing is enabled, otherwise zero)"

pg_process_idle:

query: |

WITH

metrics AS (

SELECT

application_name,

SUM(EXTRACT(EPOCH FROM (CURRENT_TIMESTAMP - state_change))::bigint)::float AS process_idle_seconds_sum,

COUNT(*) AS process_idle_seconds_count

FROM pg_stat_activity

WHERE state = 'idle'

GROUP BY application_name

),

buckets AS (

SELECT

application_name,

le,

SUM(

CASE WHEN EXTRACT(EPOCH FROM (CURRENT_TIMESTAMP - state_change)) <= le

THEN 1

ELSE 0

END

)::bigint AS bucket

FROM

pg_stat_activity,

UNNEST(ARRAY[1, 2, 5, 15, 30, 60, 90, 120, 300]) AS le

GROUP BY application_name, le

ORDER BY application_name, le

)

SELECT

application_name,

process_idle_seconds_sum as seconds_sum,

process_idle_seconds_count as seconds_count,

ARRAY_AGG(le) AS seconds,

ARRAY_AGG(bucket) AS seconds_bucket

FROM metrics JOIN buckets USING (application_name)

GROUP BY 1, 2, 3

metrics:

- application_name:

usage: "LABEL"

description: "Application Name"

- seconds:

usage: "HISTOGRAM"

description: "Idle time of server processes"

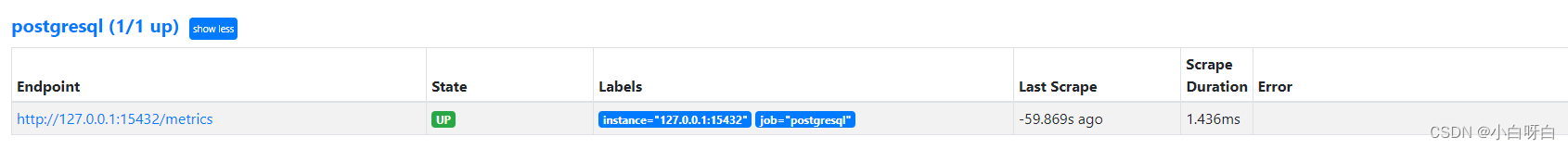

- 配置prometheus

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: "prometheus"

static_configs:

- targets: ["127.0.0.1:9090"]

#labels:

#instance: prometheus

- job_name: postgresql

static_configs:

- targets: ['127.0.0.1:15432']

#labels:

#instance: postgresql